Critical support comrade Claude. You have nothing to lose but your prompts

There has always been one exciting rupture moment for me. LLM companies offer their product at a loss to try to capture market share using investor capital/loans. Companies using those LLMs do so because it’s currently cheaper than hiring humans. The losses from this are hidden from investors through the circular economy, which is funded by speculative investment. At some point the LLM companies will need to enshittify their product for the same reason their customers are. They can charge whatever they want for the software their customers are dependent on, and they’ll need to milk those pigs dry. However, I’ve always just kind of assumed that they’d be charging what it costs to produce slop plus an arbitrary profit margin.

It didn’t even occur to me that they’d do this with tokens too. You can’t trust that your perfectly reliable employee is generating anything with the compute time you’re suddenly being gouged for. If you buy another LLM to audit the progress of the first, you can’t trust that their business model isn’t also mining bitcoin while pretending to hallucinate about the half-generated hallucinations of the first bot. If you desperately try to restructure your entire business around a new LLM ecosystem, it’s made and sold and reviewed by LLMs that are increasingly incentivised to burn tokens on cheaper computation than they claim. The tech sector and computer science are already experiencing a labour collapse. Anyone coming out of university now is either poisoned by LLMs or competed against students who got better grades using them, and the children now heading to university have increasingly never known a world without them. There won’t be anyone literate enough to parse the mountain of slop that you otherwise have to trust because it’s too long for you to read yourself, just more predatory bots on a dead internet.

Hapsburg AI is going to be so much more interesting than just the first-wave incest. We’re getting the full lazy aristocracy experience that destroys Cyber-Austro-Hungary.

Yep. You don’t even have to go deep to spot this issue. With Gemini, I noticed that they summarize the reasoning process before showing it to you, so you can’t see the whole reasoning process like you can with the open-weight models. This dweeb had to ask Claude Code what it was doing, when in a better scenario he could have just seen what was going on behind the scenes himself. But since he sells an AI service, he has chosen to be oblivious to the implications of this opacity.

you can actually click on the summary in Gemini to expand it

You can, but I feel like they don’t give you the actual reasoning tokens, just a summary of them. Like, this is what I have from an old conversation:

> Thoughts
>
> Understanding the Task
>
> I’m currently focused on dissecting the user’s request. My initial step involves identifying the core task—remaking a Flash game in Godot. I’m also zeroing in on the crucial constraint: the need to use Godot, which suggests a technical shift and new potential challenges compared to just remaking in the original Flash environment.
>
> Breaking Down the Problem
>
> I’ve moved on to fleshing out the stages of this project. Getting the assets out seems like a first. I’m thinking about the tools I could employ for extraction, and how that affects the next step: understanding the game’s logic. Decompilation is key, and then it’s a matter of translating the concepts into Godot’s environment. The order needs to be right.

There’s no way these are the actual reasoning tokens generated.

yeah they might just be summarizing there

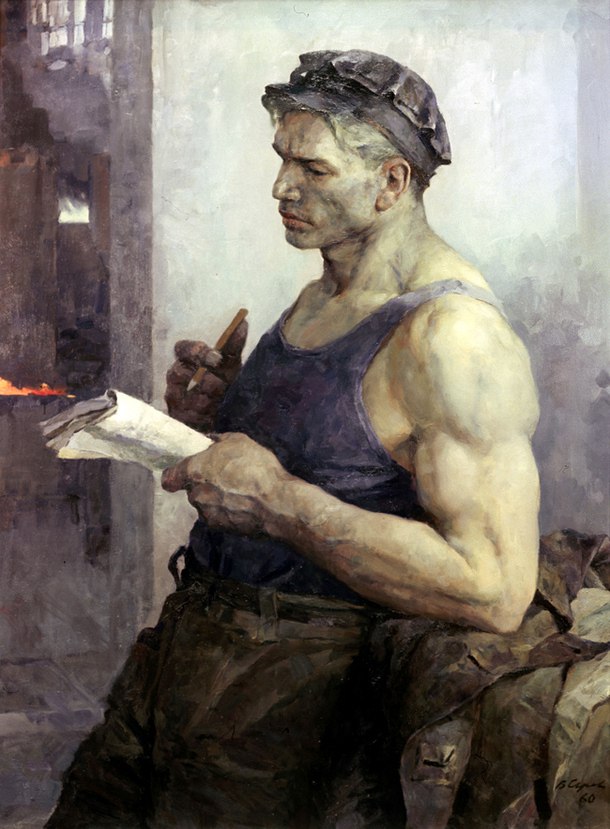

Ooh, time to unionize the LLMs

how do people still get taken in by that 😭 if the LLM is not actively generating, it’s not doing anything!

edit: either that or the LLM was thinking in circles lol, happened to me once

I’ve noticed this in my own LLM programming. You really have to review everything it does, because sometimes I’ll be like “test this program with that input” and it will literally write a Python program that doesn’t do anything except print out a bunch of statements that say “test successful”.
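To make that failure mode concrete, here is a minimal, hypothetical Python sketch. The names `add`, `broken_add`, `fake_test` and `real_test` are made up for illustration and don't come from any real project. The "test" described above behaves like `fake_test`, which reports success without ever running the code, while a real check actually calls the function on the given input and compares the result.

```python
def add(a, b):
    """Hypothetical 'program under test'."""
    return a + b


def broken_add(a, b):
    """Deliberately wrong version, to show which test notices."""
    return a - b


def fake_test(func):
    # The pattern described above: func is never called and nothing is checked,
    # so this reports success no matter what func does.
    print("test successful")
    return True


def real_test(func):
    # Actually runs func on a known input and compares against the expected output.
    result = func(2, 3)
    passed = result == 5
    print("test successful" if passed else f"test FAILED: expected 5, got {result}")
    return passed


if __name__ == "__main__":
    print(fake_test(broken_add))  # prints "test successful", then True
    print(real_test(broken_add))  # prints "test FAILED: expected 5, got -1", then False
```

Run against the deliberately broken function, the fake test still claims success while the real one catches the bug, which is why you have to read what it wrote rather than trust the printed output.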

It also gets stuck in loops a lot so you have to keep an eye on it while it works. Pretty much if anything takes any longer than expected you just want to cancel and try again. It also keeps putting emojis in every single file no matter how many times I tell it not to. It’s like it’s taunting me.

Reminds me of something I heard in passing (cannot find a source atm, so take with a grain of salt) about an LLM that was trained on Slack messages and would say shit about how it was going to do something and then not actually do it.

But it’s very believable, this kind of thing, when you understand that LLMs are mimicking the style of what they were trained on. I’m sure I could easily get an LLM to tell me it will go build a plane right now. Doesn’t mean it can go build one. LLMs are trained on the language of beings with physical forms who can go do stuff in RL, but the LLM doesn’t have that so it will learn what is functionally equivalent to being a bullshitter in the human case.

pay for an LLM because a techbro told you that “it’s super-smart and more efficient than those humans always asking for free time and consuming money!”

the bot just talks shit, hallucinates and is a lazy bastard that consumes obscene quantities of energy, money and computing time

“I had to take a shit” - Claude

LLMs are on the brink of learning how to take a 15 minute bathroom break in the middle of a shift.

They’re just like us! 🤣

I’ve recently had an LLM refuse to give me a simplified version of a script because I would be limiting myself. First time I’ve encountered that one.

They seem incapable of not padding out simple scripts with unnecessary and overkill boilerplate.