26 tho, this includes multi-container services like Immich or Paperless, which have 4 each.

I like sysadmin, scripting, manga and football.

The Pi can play hardware-accelerated H.265 at 4K 60fps.

`echo 'dXIgbW9tCmhhaGEgZ290dGVtCg==' | base64 -d`

From the thumbnail I thought Linus had been turned into a priest

I run changedetection and monitor the sample .yml files that projects usually host directly in their git repos.
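changedetection does this far more thoroughly (diffs, notifications, scheduling). For illustration only, here is a minimal sketch of the core idea, assuming the sample files are reachable at raw HTTP URLs and that a SHA-256 of the content is enough to flag a change. `WATCHED` and `STATE_FILE` are hypothetical placeholders, not anything changedetection uses.

```python
import hashlib
import json
import os
import urllib.request

# Hypothetical examples: point these at the raw sample files you care about
WATCHED = {
    "example-compose": "https://example.com/raw/docker-compose.yml",
}
STATE_FILE = "/tmp/sample-yml-hashes.json"


def digest(content: bytes) -> str:
    """Return a stable fingerprint of a file's content."""
    return hashlib.sha256(content).hexdigest()


def has_changed(name: str, content: bytes, state: dict) -> bool:
    """Compare against the last seen hash, then record the new one.

    The first time a file is seen there is nothing to compare to,
    so it is recorded but not reported as changed.
    """
    new = digest(content)
    old = state.get(name)
    state[name] = new
    return old is not None and old != new


def load_state(path: str) -> dict:
    """Load previously seen hashes, or start fresh if none exist yet."""
    if os.path.isfile(path):
        with open(path) as f:
            return json.load(f)
    return {}


def main() -> None:
    """Fetch every watched file once and print the ones that changed."""
    state = load_state(STATE_FILE)
    for name, url in WATCHED.items():
        with urllib.request.urlopen(url, timeout=30) as resp:
            content = resp.read()
        if has_changed(name, content, state):
            print(f"{name} changed upstream: {url}")
    with open(STATE_FILE, "w") as f:
        json.dump(state, f)
```

Calling `main()` from a daily cron job would be enough for a handful of files; anything beyond that is where changedetection earns its keep.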

Bring back my computer as well

People say to go back in time and pick the winning lotto numbers.

I say go back in time and sell my 8TB disk for 80 billion

“we would welcome contributions to integrate a performant and memory-safe JPEG XL decoder in Chromium. In order to enable it by default in Chromium we would need a commitment to long-term maintenance.”

yeah

For the price of the car, I would expect the SSD to grow wheels and actually be able to drive it.

gpt-oss:20b is only 13GB

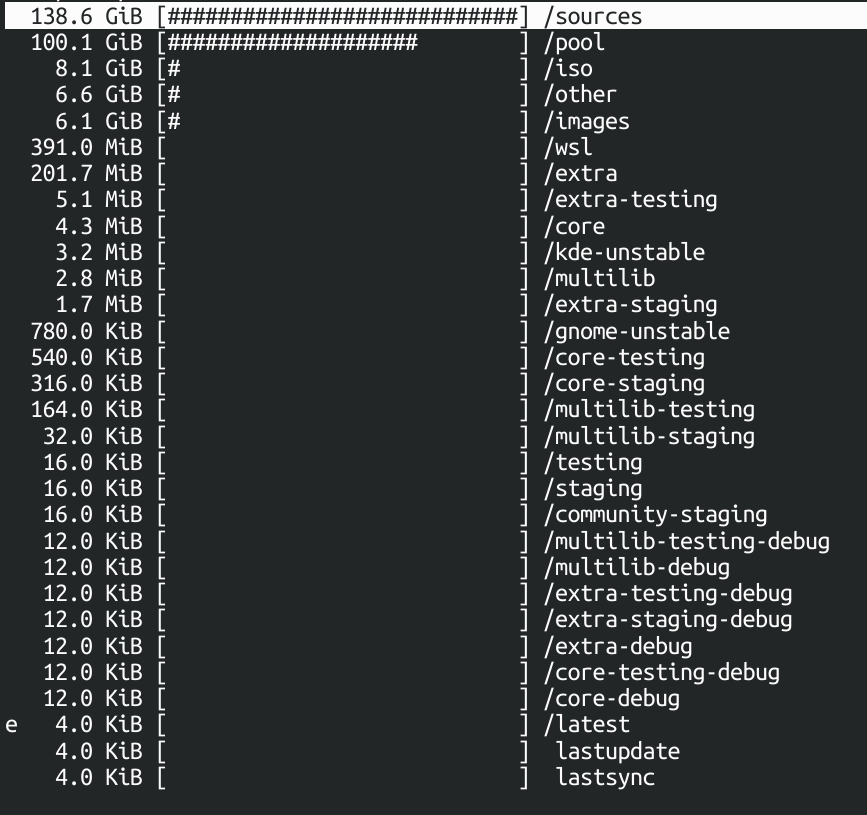

This includes everything for a total of 261G

I have 4 Arch machines, so I keep a local mirror of the entire Arch repo on my home server and just sync from there at full speed.
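Keeping a mirror like this current usually comes down to a periodic rsync from an upstream mirror that offers rsync access. A minimal sketch, assuming a hypothetical upstream URL and target path (`UPSTREAM` and `TARGET` are placeholders); the flags are standard rsync options commonly used for mirroring.

```python
import subprocess

# Hypothetical values: pick an upstream mirror that offers rsync access
UPSTREAM = "rsync://mirror.example.org/archlinux/"
TARGET = "/srv/mirror/archlinux/"


def build_rsync_command(upstream: str, target: str) -> list[str]:
    """Assemble an rsync invocation suitable for mirroring a repo tree."""
    return [
        "rsync",
        "-rtlH",            # recursive, keep mtimes, copy symlinks as symlinks, keep hard links
        "--safe-links",     # ignore symlinks that point outside the tree
        "--delete-after",   # drop files removed upstream, but only after the transfer finishes
        "--delay-updates",  # move updated files into place at the end, narrowing the half-synced window
        upstream,
        target,
    ]


def sync() -> int:
    """Run one sync pass and return rsync's exit code."""
    return subprocess.run(build_rsync_command(UPSTREAM, TARGET)).returncode
```

Each Arch machine can then list the home server first in `/etc/pacman.d/mirrorlist`, e.g. `Server = http://homeserver/archlinux/$repo/os/$arch`, assuming the mirror directory is served over HTTP (the `homeserver` hostname is hypothetical).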

Ollama works fine on my 9070 XT.

I tried gpt-oss:20b and it gives around 17 tokens per second, which is about as fast as you can read the reply.

Idk how that compares to the Nvidia equivalent tho
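To compare cards fairly, it helps to measure the same way each time. A minimal sketch, assuming Ollama is listening on its default port 11434: a non-streaming `/api/generate` response includes `eval_count` (generated tokens) and `eval_duration` (nanoseconds), so the generation rate is their ratio. The default prompt is an arbitrary placeholder.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def tokens_per_second(response: dict) -> float:
    """eval_count is the number of generated tokens; eval_duration is in nanoseconds."""
    return response["eval_count"] * 1e9 / response["eval_duration"]


def benchmark(model: str = "gpt-oss:20b",
              prompt: str = "Explain RAID 5 in one paragraph.") -> float:
    """Run one non-streaming generation and return its tokens per second."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode()
    req = urllib.request.Request(
        OLLAMA_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=600) as resp:
        return tokens_per_second(json.load(resp))
```

Running `benchmark()` with the same model and prompt on both GPUs gives comparable figures; `ollama run gpt-oss:20b --verbose` also prints an eval rate after each reply.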

just added

The north doesn’t need backups because errors do not happen there /s

I tried it in the past and it felt too heavy for my use case. Also, for some reason the sidebar menu doesn't show all the items at all times; instead it only shows the ones related to the branch you just went into.

Also, it seems pretty dead update-wise.

mdBook is really nice if you don't mind the lack of dynamic editing in a web browser.

Yes, I do. I cooked up a small Python script that runs at the end of every daily backup:

```python
import json
import os
import subprocess

# Output directory
OUTPUT_DIR = "/data/dockerimages"
os.makedirs(OUTPUT_DIR, exist_ok=True)

# Grab all the docker images. Each output line is a JSON object describing one image
imagenes = subprocess.run(
    ["docker", "images", "--format", "json"],
    stdout=subprocess.PIPE,
    stderr=subprocess.DEVNULL,
    text=True,
    check=True,
).stdout.splitlines()

for imagen in imagenes:
    if not imagen.strip():
        continue
    datos = json.loads(imagen)
    # ID of the image to save
    imageid = datos["ID"]
    # Save by repo:tag when there is one, so `docker load` restores the tag too;
    # an archive saved by bare image ID comes back untagged
    if datos["Repository"] != "<none>" and datos["Tag"] != "<none>":
        ref = f"{datos['Repository']}:{datos['Tag']}"
    else:
        ref = imageid
    # Compose the output name like this
    # ghcr.io-immich-app-immich-machine-learning:release:2026-01-28:3c42f025fb7c.tar
    created = datos["CreatedAt"].split(" ")[0]
    outputname = f"{datos['Repository']}:{datos['Tag']}:{created}:{imageid}.tar".replace("/", "-")
    outputpath = os.path.join(OUTPUT_DIR, outputname)
    # If the file already exists just skip it
    if not os.path.isfile(outputpath):
        print(f"Saving {outputname}...")
        subprocess.run(["docker", "save", ref, "-o", outputpath])
    else:
        print(f"Already exists {outputname}")
```
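The other half of such a backup is getting the images back. A minimal sketch, assuming the archives sit in the same `/data/dockerimages` directory; `list_archives` and `restore` are hypothetical helper names. Archives created with `docker save repo:tag` come back tagged after `docker load`; archives saved by bare image ID come back untagged and need a manual `docker tag`.

```python
import glob
import os
import subprocess

OUTPUT_DIR = "/data/dockerimages"  # directory the backup script writes to


def list_archives(directory: str) -> list[str]:
    """Return the saved image tarballs in the directory, sorted by filename."""
    return sorted(glob.glob(os.path.join(directory, "*.tar")))


def restore(path: str) -> None:
    """Load one saved image archive back into the local Docker engine."""
    subprocess.run(["docker", "load", "-i", path], check=True)


def restore_all(directory: str = OUTPUT_DIR) -> None:
    """Load every archive found in the backup directory."""
    for path in list_archives(directory):
        print(f"Loading {os.path.basename(path)}...")
        restore(path)
```

Since the filenames embed the creation date, it may be simpler to pick a single archive by hand and call `restore()` on it rather than loading every version with `restore_all()`.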