StabilityAI’s newest diffusion model (up to the newly announced Stable Diffusion 3, that is!), StableCascade, is said to produce images faster and better than SDXL via addition of an extra diffusion stage. But how is it in practice?

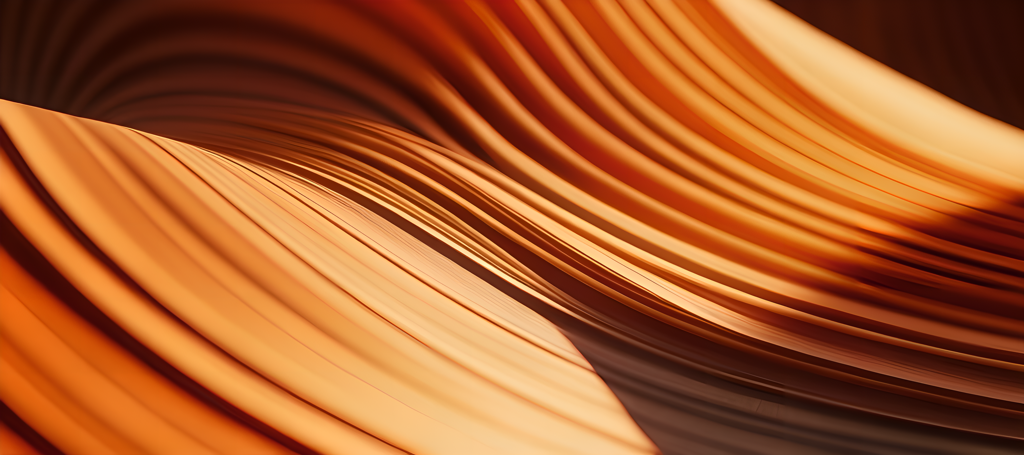

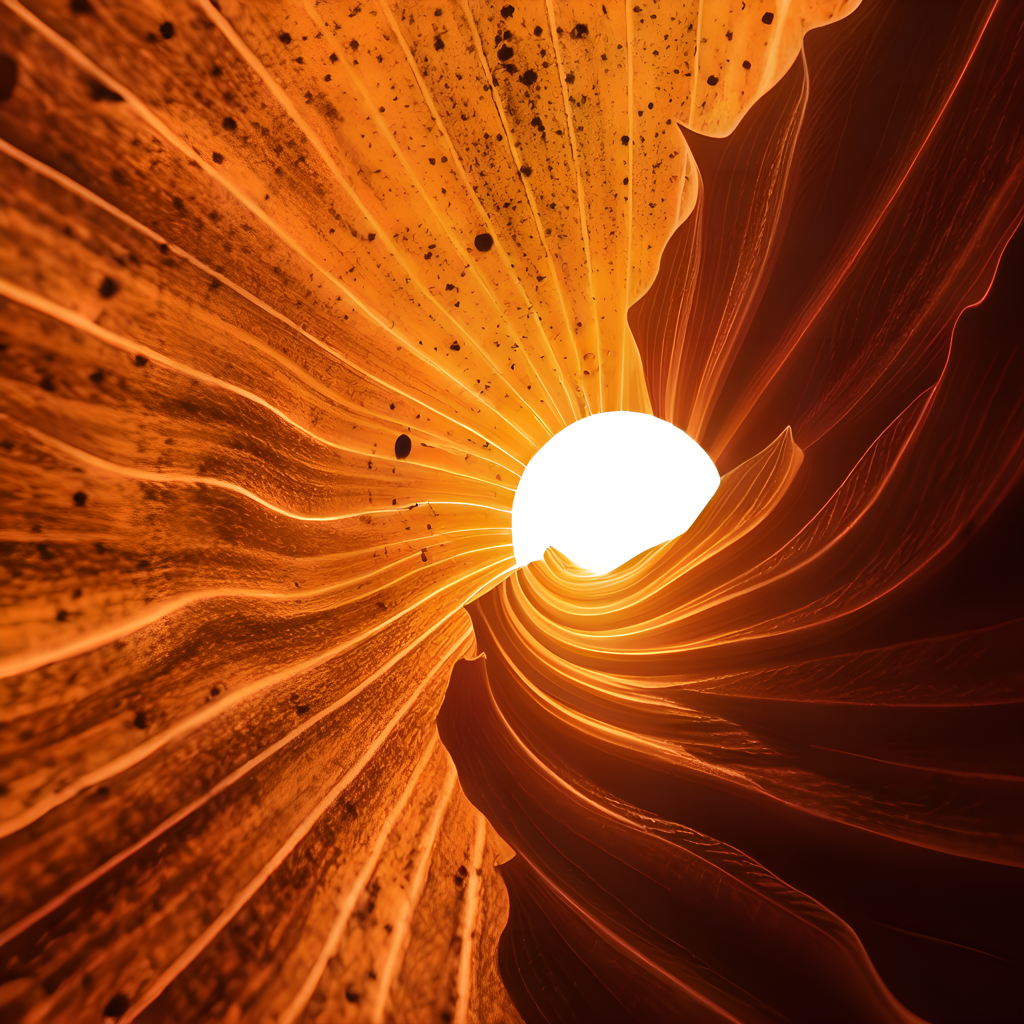

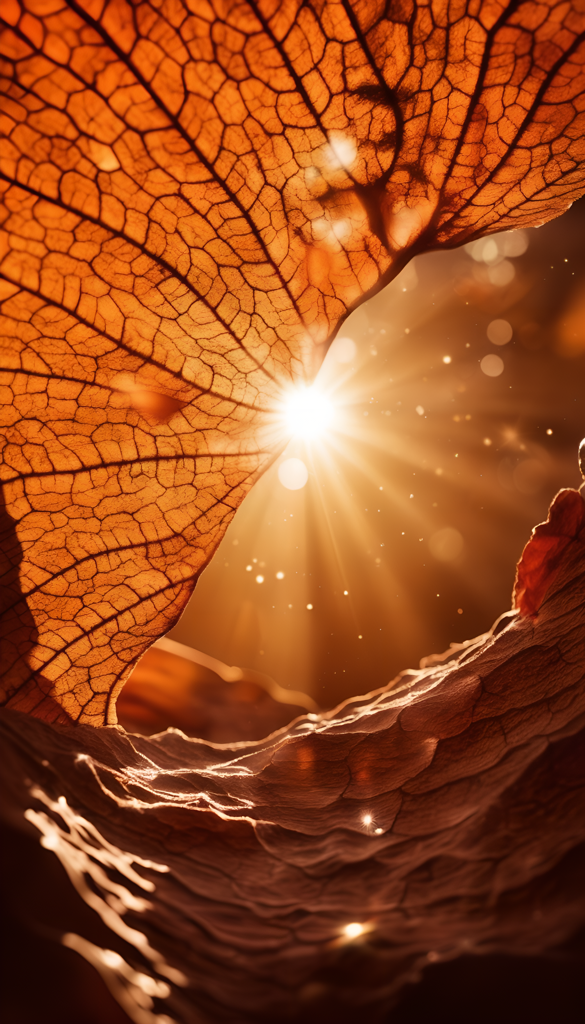

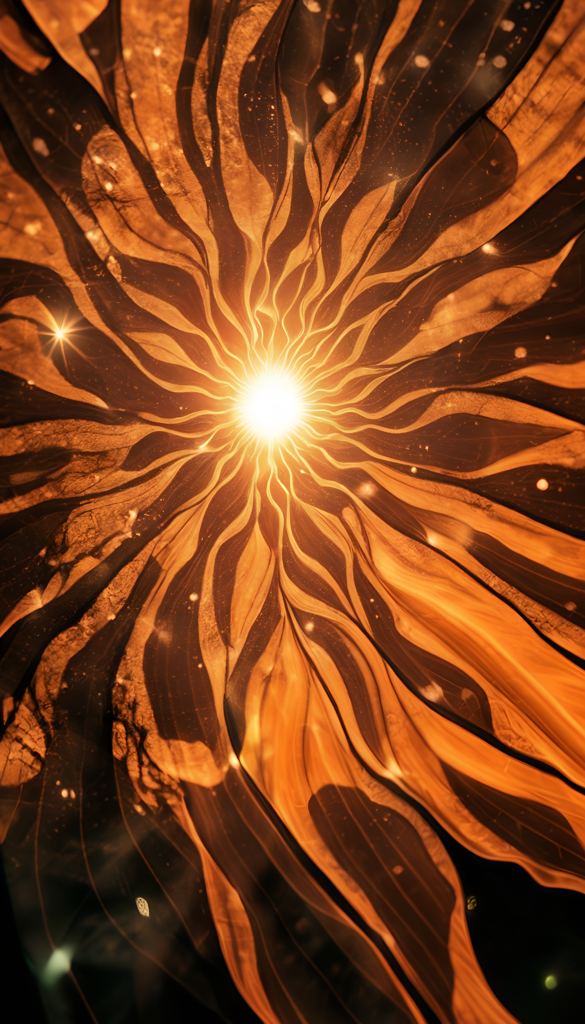

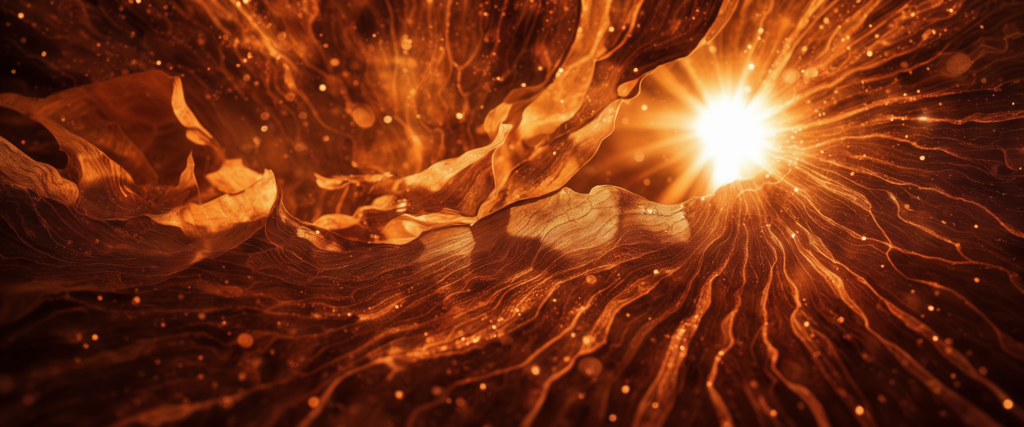

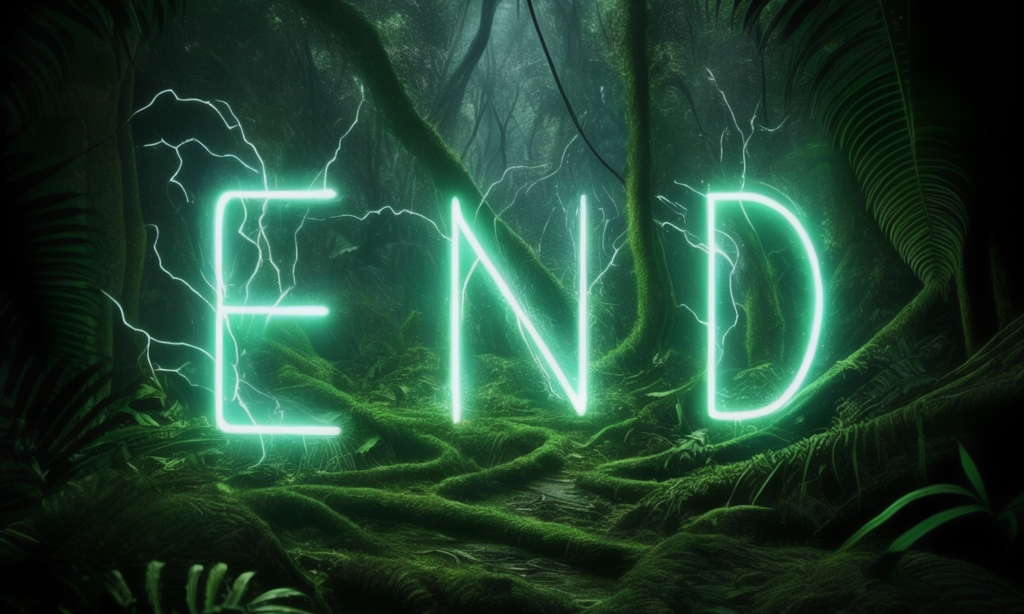

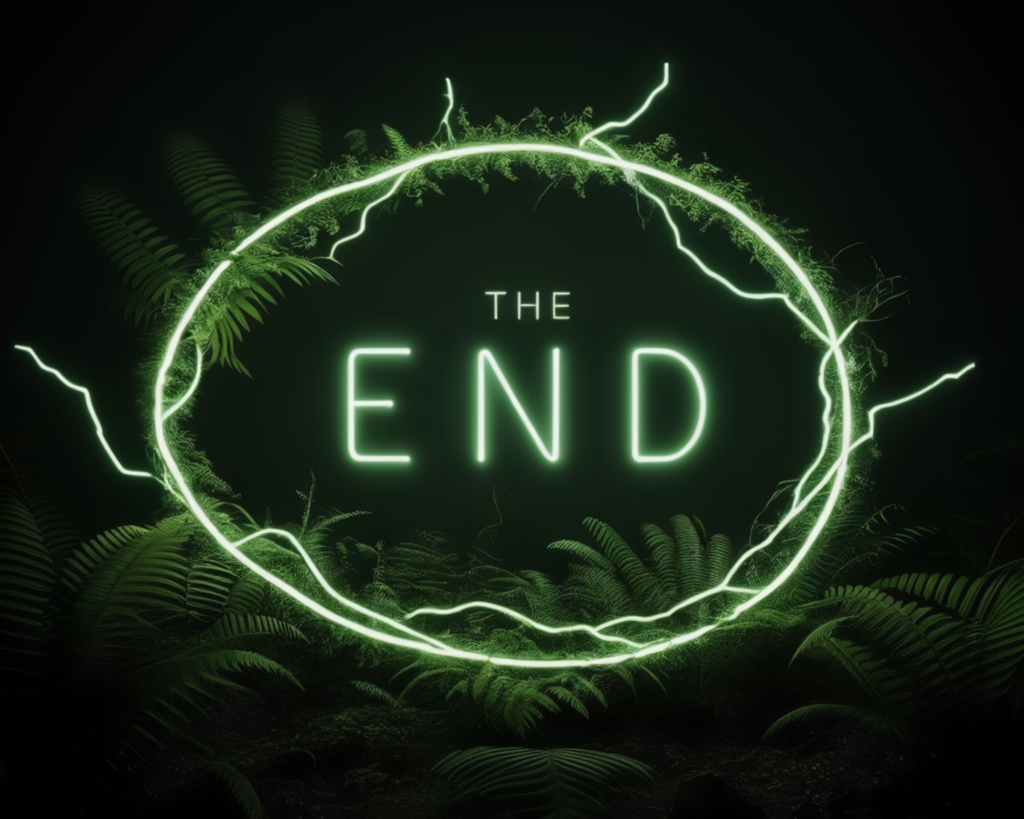

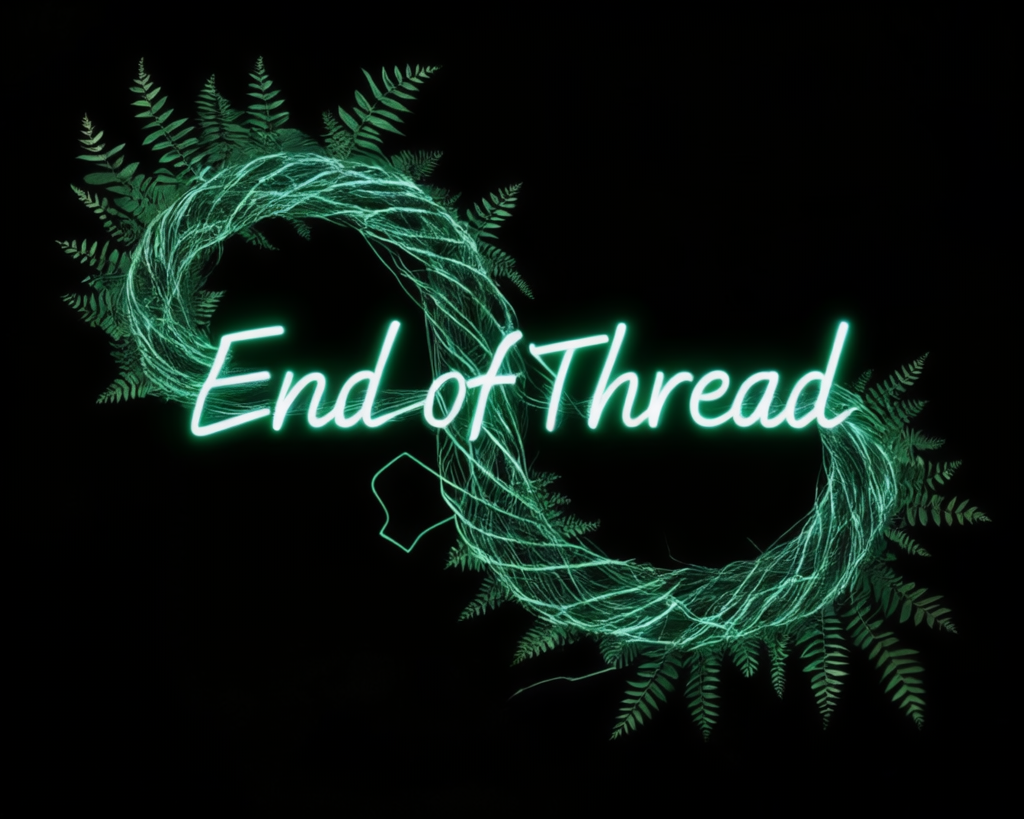

First, let’s answer the question of “Can it do pretty stuff”, with a clear “yes”.

It’s not yet well integrated into Automatic1111, but can be run with basic features in its own tab via:

The first set of diffusion steps controls the layout; the other, finetuned detail.

One thing noticed right off the bat is its memory efficiency for large images.

Running on only a 12GB 3060, it has no trouble making images thousands of pixels wide (up to half a dozen megapixels or more), whereas with SDXL you crash out much over the native resolution of 1024x1024.

Stable Cascade also seems to be native to 1024x1024, but seems more lenient against deviation.

That said, different parts of the image still “lose sight of distant parts” over time, and even abstracts like the above start to get somewhat repetitive; the highest resolution images below were 4096x1280 without any upscaling.

Testing with a less abstract prompt of “epic handshake photograph”, we see that the 2048x640 handshake actually looks quite good, but the more elongated ones have coherence problems (though are still quite attractive). I greatly enjoy the 4096x1280 one ;) )

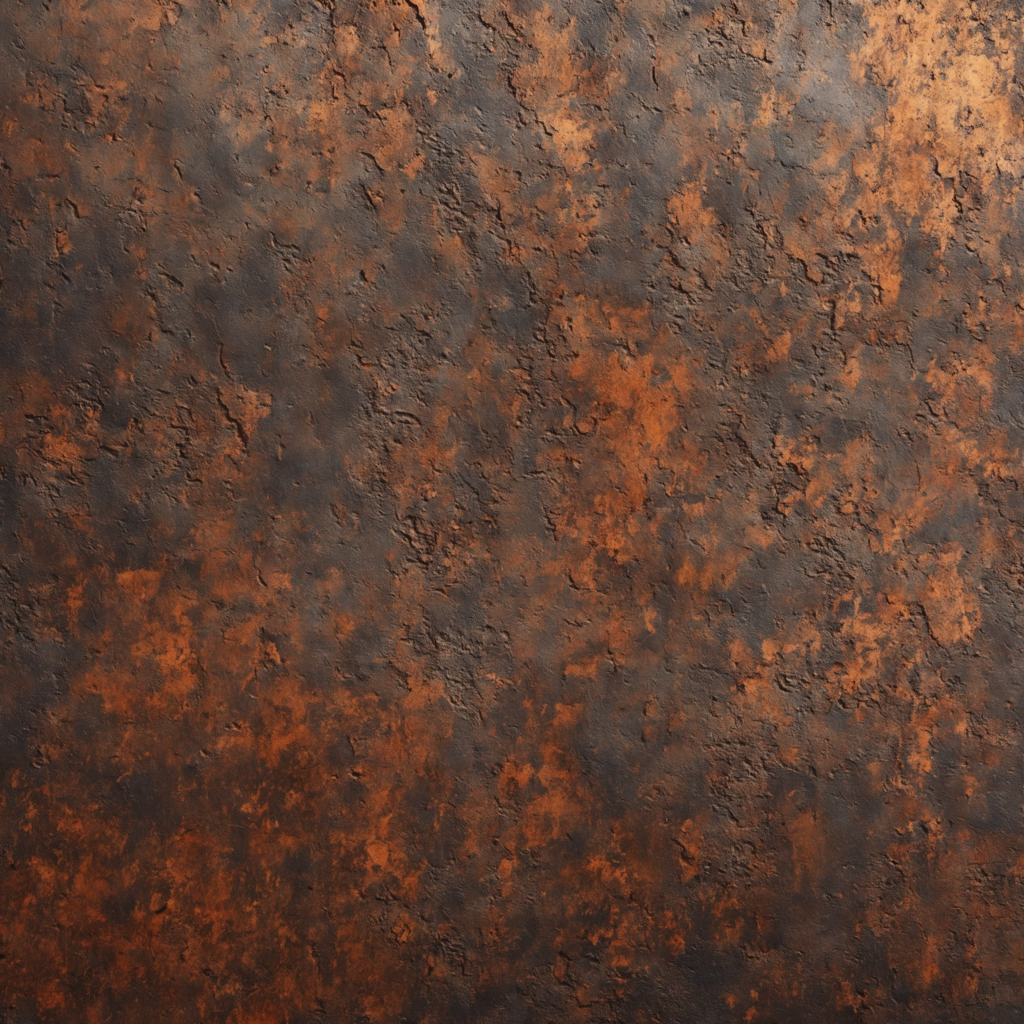

There are however limits to how large you can go. An attempt to make a rusty steel texture failed at 3072x3072 (9MP), but succeeded at 2560x2560 (6,5MP)

Once Stable Cascade is better integrated into Automatic1111 and img2img and ControlNet can be used with it, it’ll be killer for inpaint & upscale.

There’s some claims that Stable Cascade is better at spelling than SDXL. Well… I’d say “yes”, but don’t expect too much. Top is SDXL, bottom is Stable Cascade, both asked for an ornate sign that says “Welcome to Bluesky!”. Both are mostly fails, but the letters are better formed in Stable Cascade

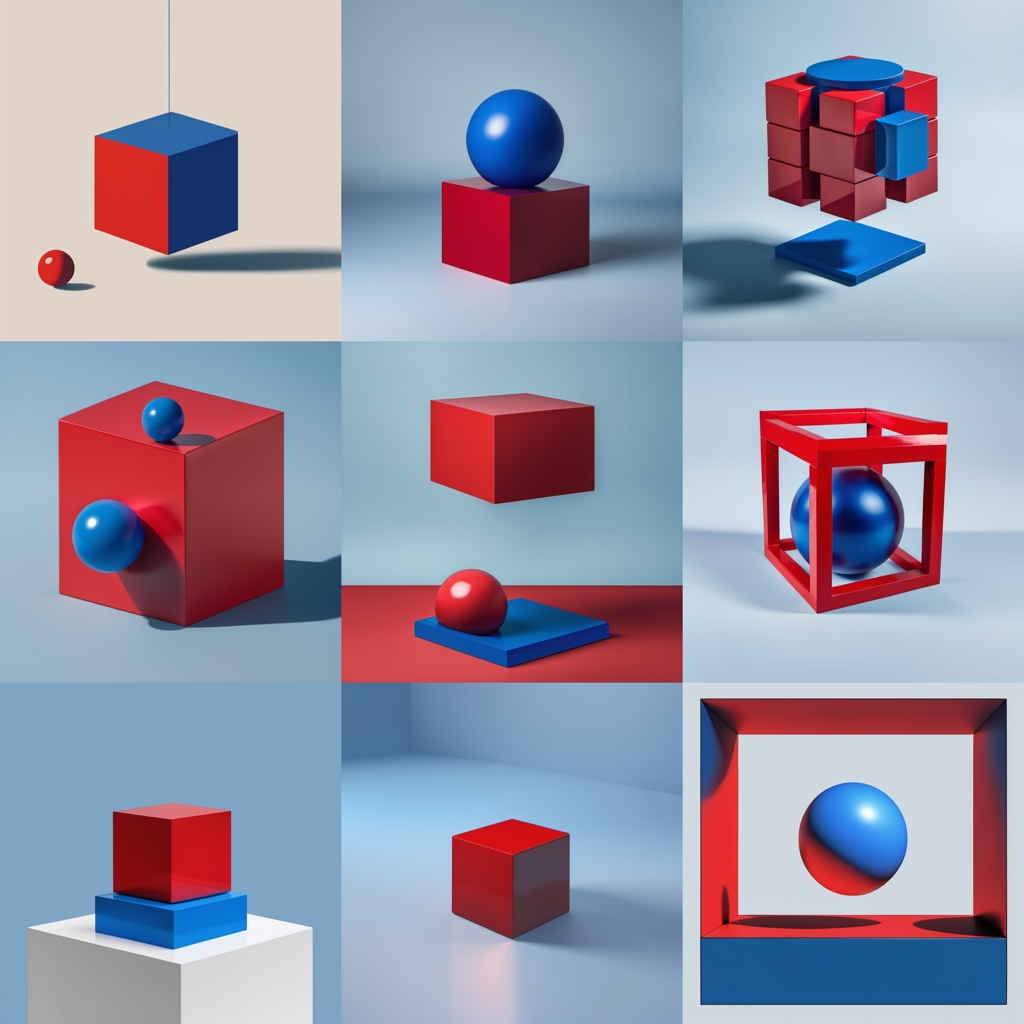

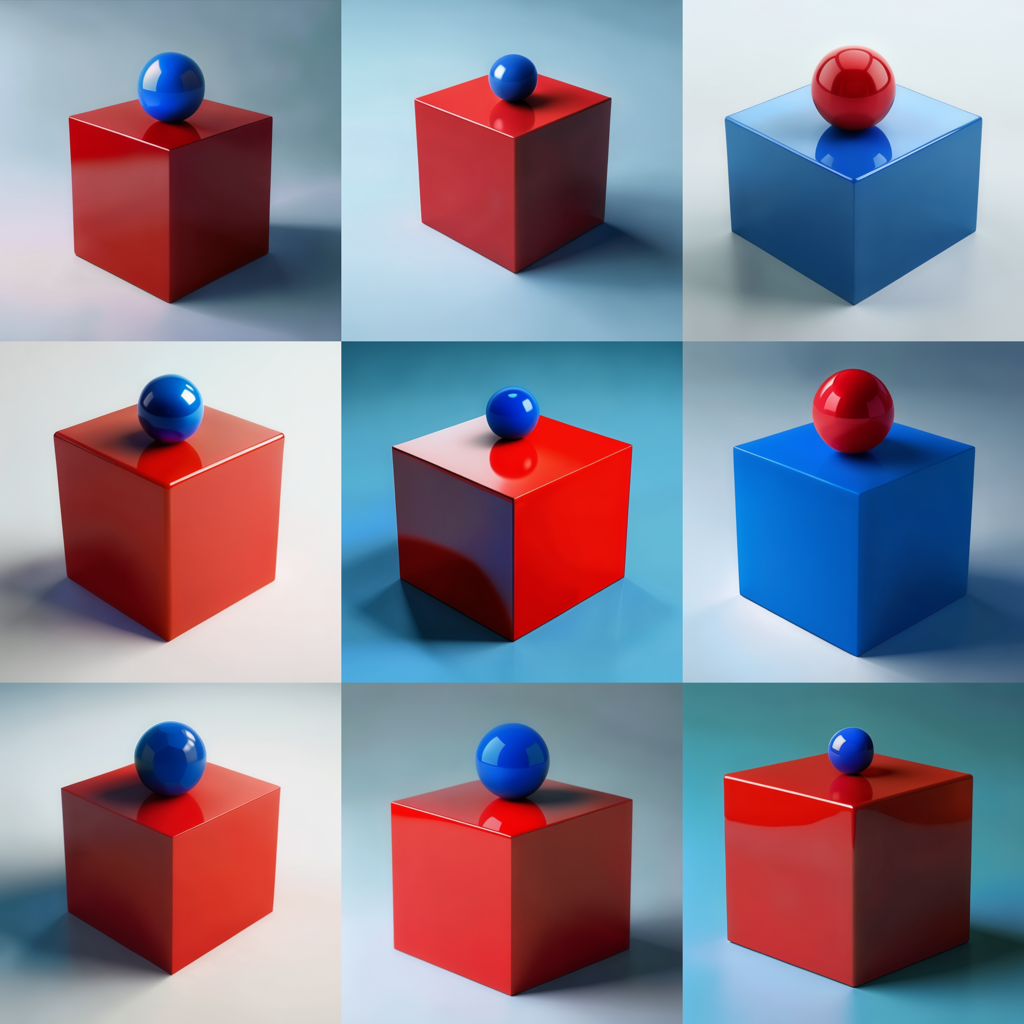

To test its text model, I ask it for a “red cube on top of a blue sphere.” Again, top is SDXL, bottom is Stable Cascade. It’s mostly red cubes and blue spheres, but it just can’t get the order right (normally you don’t balance things on spheres). Also, note the lack of diversity. Keep that in mind.

To test two things diffusion models have trouble with - hands and guns - I asked for a clown wielding an AK-47 (SD top, SC bottom). Are Stable Cascade’s hands and guns better? Yeah. But the low image diversity is really problematic. It has a SPECIFIC clown in mind.

Gemini has been taking flak recently for inserting diversity where it doesn’t belong. Asked to draw an 18th century pope in a cornfield, none of them add in inaccurate racial or gender diversity, but while SD’s image variety (normally a strong suit) isn’t at its strongest here, SC’s is basically nonexistent.

In terms of desirable racial / gender diversity, my go-to test is “military personnel”. SDXL (left) tends to get the 1/6th female, 1/3rd black, etc mix of the actual US military. But unsurprisingly… Stable Cascade just gets one particular image in mind for any given prompt, and never deviates far from it.

In short… Stable Cascade is a mixed bag. Poor on image diversity, and only marginal improvements in several key areas, but quite pretty, great on memory management, and should become a great tool for use with ControlNet, img2img, and in upscaling.

(Respective prompts:

- “The End”. Helical rainforest. Lightning. Plasma. Hope. “The End”. “The End”. 2: Glowing words “The End”. Helical rainforest. Lightning. Plasma. Hope. “The End” written. “The End”. 3: Glowing words “The End”. Helical rainforest. Lightning. Plasma. Hope. “The End” written. “The End”. 4: Glowing words “End Of Thread”. Helical rainforest. Lightning. Plasma. Hope. “End Of Thread” written. “End Of Thread”.

First generation of each taken; no cherry picking)

Awesome work. Hope to see more effort threads like these around here :)

Excellent comparison, I wonder why they’ve released v3 so soon after this

My suspicion is that they have different teams working in parallel, so it leads to a rather irregular release schedule.

When I asked Scott Dettweiler about it, he said it was more of an experiment

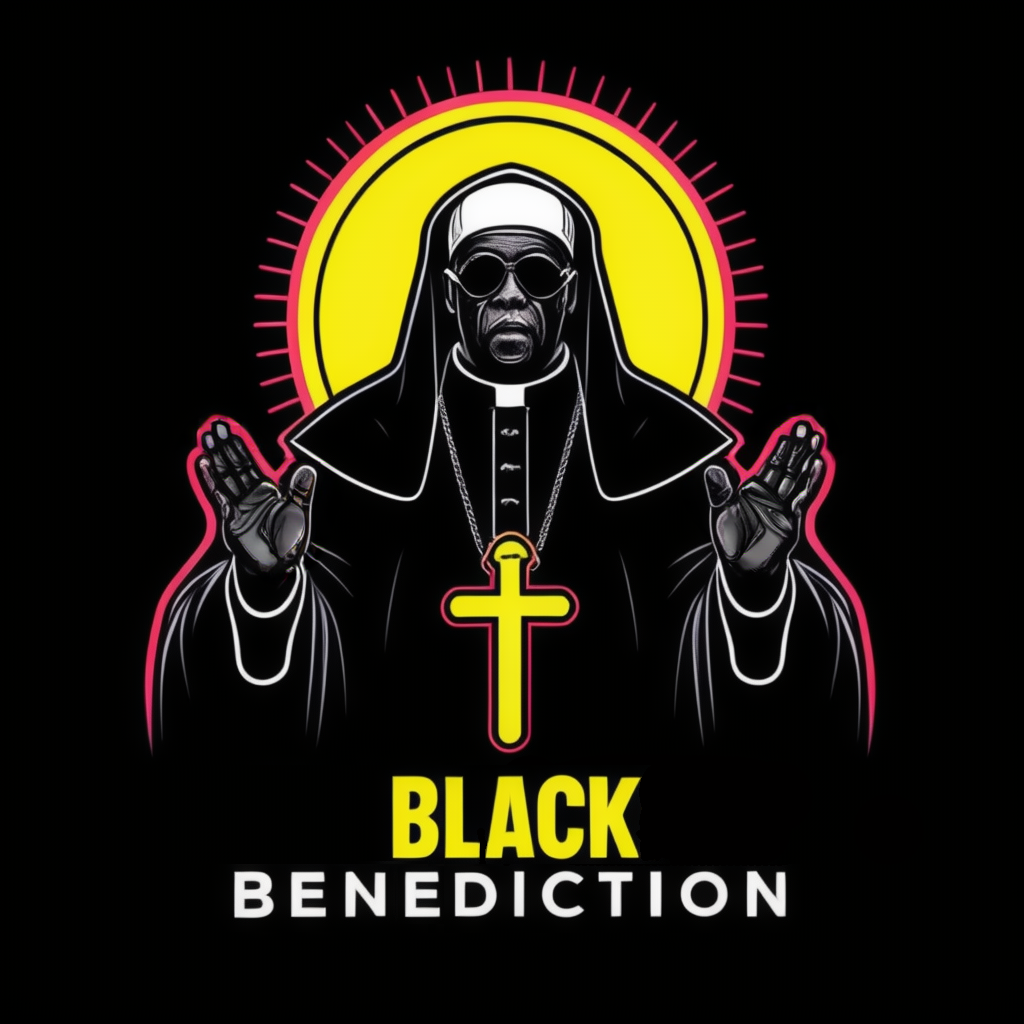

Can you generate a black pope with it though?

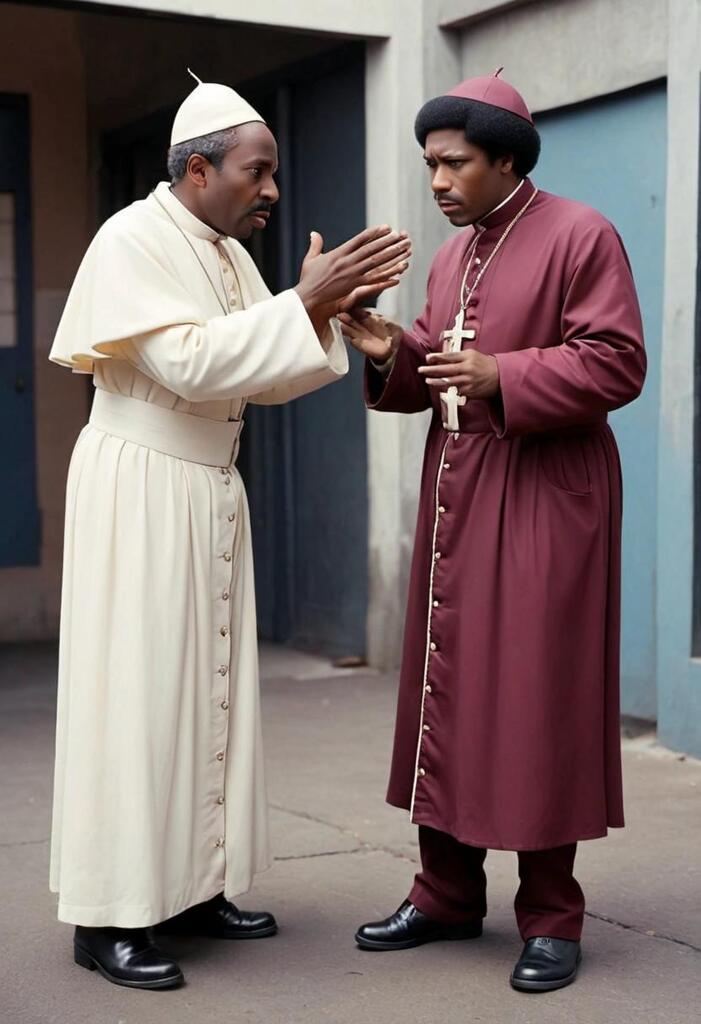

Thanks a bunch, now you got me generating black popes in the style of old Blaxploitation films ;)

@[email protected] draw for us black popes in the style of old Blaxploitation films

Here are some images matching your request

Prompt: black popes in the style of old Blaxploitation films

Style: fustercluck

@[email protected] draw for us Blaxploitation cinema popes blessing ass and taking alms.

Here are some images matching your request

Prompt: Blaxploitation cinema popes blessing ass and taking alms.

Style: fustercluck

First try. :)

I see it contaminated the robes with black just like SD does. He’s missing the fancy hat too.

Speaking of contamination, in the “film” images above, the top two are a good example of rubbing-off - I asked for neon text, but the whole image got neon-toned. All of them are black background also.

Great work! Interesting that the diversity is so low with cascade. Are the gpu requirements any different?

I’ve not tried anything less than a RTX 3060 (12GB). But I’m impressed by how large images I can generate on it compared to SDXL.

New Lemmy Post: Thread: A comparison between Stable Cascade and SDXL (https://lemmy.dbzer0.com/post/14904919)

Tagging: #StableDiffusion(Replying in the OP of this thread (NOT THIS BOT!) will appear as a comment in the lemmy discussion.)

I am a FOSS bot. Check my README: https://github.com/db0/lemmy-tagginator/blob/main/README.md