Enforcing that ban is going to be difficult.

They’re gonna use AI to detect the use of AI.

Not if I use AI to hide my use of AI first!

They are going to implement an AI detector detector detector.

It’s detectors all the way down.

But of course, as a tortoise, you would know that.

Did I seduce you a lot with my hard, polished shell? You can speak freely, nobody will ever know!

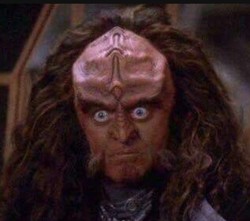

No, it was your intense, Gowron-like stare that truly drilled into my heart.

Glory to seduction! Glory to the empire!

But then they’ll implement an AI detector detector deflector.

Trace busta busta?

This one

Fun fact, this loop is kinda how one of the generative ML algorithms works. It’s called a Generative Adversarial Network, or GAN.

You have a so-called Generator neural network G that generates something (usually images) from random noise and a Discriminator neural network D that can take images (or whatever you’re generating) as input and outputs whether this is real or fake (not actually in a binary way, but as a continuous value). D is trained on images from G, which should be classified as fake, and real images from a dataset that should be classified as real. G is trained to generate images from random noise vectors that fool D into thinking they’re real. D is, like most neural networks, essentially just a mathematical function, so you can use derivatives to compute how the generated image (and, through it, G’s weights) should change to make it appear more real.
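To make the derivative part concrete, here’s a minimal sketch in plain Python. It’s a toy under heavy assumptions: the “image” is a single number, D is a hand-picked logistic function (the values of `w` and `b` are made up for illustration, not trained), and the sample itself is nudged directly. In an actual GAN the same gradient would be passed back through D into G’s weights instead.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def discriminator(x, w=2.0, b=-4.0):
    """Toy D: maps a single number to a 'realness' score between 0 and 1."""
    return sigmoid(w * x + b)


def realness_gradient(x, w=2.0, b=-4.0):
    """Derivative of D with respect to its input: dD/dx = w * D * (1 - D)."""
    d = discriminator(x, w, b)
    return w * d * (1.0 - d)


def nudge_towards_real(x, steps=50, lr=1.0):
    """Repeatedly move x in the direction that raises D's score."""
    for _ in range(steps):
        x += lr * realness_gradient(x)
    return x


fake = 1.0  # a sample that the toy D scores as mostly fake
improved = nudge_towards_real(fake)
print(discriminator(fake), discriminator(improved))
```

The score for `improved` ends up much higher than for `fake`, purely by following the derivative of D.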

In the perfect case these 2 networks battle until they reach peak performance. In practice you usually need to do some extra shit to prevent the whole situation from crashing and burning. What often happens, for instance, is that D becomes so good that it doesn’t provide any useful feedback anymore. It sees the generated images as 100% fake, meaning there’s no longer an obvious way to alter the generated image to make it seem more real.
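Here’s a rough sketch of that “D is too good” failure, assuming a toy D that is just a logistic function on a single number. The two parameter sets are invented for illustration: both put the real/fake boundary at the same spot, but the second one is far more confident.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def realness_gradient(x, w, b):
    """dD/dx for a toy logistic discriminator D(x) = sigmoid(w * x + b)."""
    d = sigmoid(w * x + b)
    return w * d * (1.0 - d)


fake_sample = 1.0

# A moderately confident D still tells the generator which way to move.
balanced_grad = realness_gradient(fake_sample, w=2.0, b=-4.0)

# An overconfident D scores the sample as almost exactly 0 ("100% fake"),
# so the derivative is practically zero and gives no usable direction.
overconfident_grad = realness_gradient(fake_sample, w=50.0, b=-100.0)

print(balanced_grad, overconfident_grad)
```

The second gradient is many orders of magnitude smaller than the first, which is the “no useful feedback” situation in miniature.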

Sorry for the infodump :3

Spoken like a true AI.

Well the AI-based AI detector isn’t actively making creative people’s work disappear into a sea of gen-AI “art” at least.

There’s good and bad use cases for AI, I consider this a better use case than generating art. Now the question is whether or not it’s feasible to detect AI this way.

Indeed.

I have an Immich instance running on my home server that backs up my and my wife’s photos. It’s like an open source Google Photos.

One of its features is a local AI model that recognises faces and tags names on them, as well as doing stuff like recognising when a picture is of a landscape, food, etc.

Likewise, Firefox has a really good offline translation feature that runs locally and is open source.

AI doesn’t have to be bad. Big tech and venture capital is just choosing to make it so.

Seems that way:

Ouroboros

Just the threat of being able to summarily remove AI content and hand out account discipline will cut down drastically on AI and practically eliminate the really low effort ‘slop’, it’s not perfect but it’s damn useful.

It’s also going to make it really easy to take down the content you don’t like, just accuse it of being AI and watch the witch hunting roll in. I’ve seen plenty of examples of traditional artists getting accused of using AI in other forums, I don’t imagine this will be any different.

I got accused of being an AI for writing a comment reply to someone which was merely informative, empathic and polite!

People already mass report to abuse existing AI moderation tools. It’s already starting to be accounted for and I can’t imagine it so much as slowing down implementing an anti AI rule if I’m being honest.

The ban doesn’t need a 100% perfect AI screening protocol to be a success.

Just the fact that AI is banned might appeal to a wide demographic. If the ban is actually enforced, even in just 25% of the most blatant cases, it might be just the push a new platform needs to take off.

Just because something might be hard means we should give up before even trying?

Of course not, but I don’t think anybody suggested that.

Only if we let it be. There’s no technical reason why the origin of a video couldn’t have a signature generated by the capture device, or legally requiring AI models to do the same for any content they generate. Anything without an origin sticker is assumed to be garbage by default. Obviously there would need to be some way to make captures either anonymous or not at the user’s choice, and nation states can evade these things with sufficient effort like they always do, but we could cut a lot of slop out by doing some simple stuff like that.

“Legally” doesn’t mean shit if it’s not enforceable. Besides, removing watermarks is trivial.

There is no technically rigorous way to filter AI content, unfortunately.

While a phone signing a video to show that it was captured with the camera is possible, it would also be easy to fake the signature. All it would take is a hacked device to steal the private key. And even if Apple/Google/Samsung have perfectly secure systems to sign the origin of the video, there would be a ton of cheaper phones that likely won’t.
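A small sketch of both sides of this, with loud caveats: it uses an HMAC from Python’s standard library as a stand-in, which is a shared-secret scheme, whereas a real capture-signing system would presumably use an asymmetric signature with the private key locked inside the device. The key, the byte strings and the function names are all hypothetical.

```python
import hashlib
import hmac


def sign_capture(video_bytes: bytes, device_key: bytes) -> str:
    """Produce an origin tag for the captured bytes (HMAC-SHA256 stand-in)."""
    return hmac.new(device_key, video_bytes, hashlib.sha256).hexdigest()


def verify_capture(video_bytes: bytes, tag: str, device_key: bytes) -> bool:
    """Check that the tag matches the bytes, using a constant-time compare."""
    return hmac.compare_digest(sign_capture(video_bytes, device_key), tag)


device_key = b"hypothetical-key-stored-in-the-camera"
original = b"raw camera frames"
tag = sign_capture(original, device_key)

genuine_ok = verify_capture(original, tag, device_key)           # True
tampered_ok = verify_capture(b"edited frames", tag, device_key)  # False

# If the key leaks from a hacked device, generated content signs just as well.
stolen_key = device_key
forged = b"frames from a generator"
forged_ok = verify_capture(forged, sign_capture(forged, stolen_key), device_key)  # True

print(genuine_ok, tampered_ok, forged_ok)
```

So the tag does catch edits to a signed file, but it only proves that someone holding the key signed the bytes, not that a camera produced them.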

So what’s the angle? The Internet is getting flooded by AI slop. AI needs fresh REAL content to train with. That’s the angle. You are there to provide fresh and original content to feed the AI.

That’s a very good point and probably exactly the idea. Dorsey has always just been an actor that says one thing and thinks another.

Omfg this is so awful it’s likely either accidental truth or a damn good prediction

Oh god, we have an AI incest flood ahead of us don’t we?

Maybe the angle is just that people hate Ai? Seriously, especially young people…

Is you a youngin? Cause no product under the control of a billionaire is free. If it’s free, you are the product. AI is hated and they’re trying to make a product using that hate as a basis for target audience

Nothing is free. If they can sell ads to people because they don’t like AI, they will. They’re rebooting it with about the same intent as it was originally designed to have.

Again with this idea of the ever-worsening ai models. It just isn’t happening in reality.

The same reality where GPT5’s launch a couple months back was a massive failure with users and showed a lot of regression to less reliable output than GPT4? Or perhaps the reality where most corporations that have used AI found no benefit and have given up, as reported this year?

LLMs are good tools for some uses, but those uses are quite limited and niche. They are however a square peg being crammed into the round hole of ‘AGI’ by Altman etc while they put their hands out for another $10bil - or, more accurately while they make a trade swap deal with MS or Nvidia or any of the other AI ouroboros trade partners that hype up the bubble for self-benefit.

Not only is it actually happening, it’s actually well researched and mathematically proven.

It has been proven over and over that this is exactly what happens. I don’t know if it’s still the case, but ChatGPT was strictly limited to training data from before a certain date because the amount of AI content after that date had negative effects on the output.

This is very easy to see because an AI is simply regurgitating algorithms created based on its training data. Any biases or flaws in that data become ingrained into the AI, causing it to output more flawed data, which is then used to train more AI, which further exacerbates the issues as they become even more ingrained in those AI who then output even more flawed data, and so on until the outputs are bad enough that nobody wants to use it.
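For anyone who wants to poke at the feedback loop itself, here’s a toy sketch. It is nothing like real LLM training: the “model” is just a mean and a spread fitted to a handful of numbers, and each generation is trained only on samples from the previous one. The sample size, generation count and seed are arbitrary picks for illustration.

```python
import random
import statistics


def train_on_own_output(generations=200, samples_per_generation=10, seed=0):
    """Refit a mean/spread 'model' on its own samples, generation after generation."""
    rng = random.Random(seed)
    mean, spread = 0.0, 1.0  # generation 0 stands in for the original "real" data
    history = [spread]
    for _ in range(generations):
        # The current model produces the next generation's training data.
        data = [rng.gauss(mean, spread) for _ in range(samples_per_generation)]
        # The next model is fitted purely to that output.
        mean = statistics.fmean(data)
        spread = statistics.pstdev(data)
        history.append(spread)
    return history


history = train_on_own_output()
print(history[0], history[-1])
```

In this toy the spread collapses towards zero, so the later “models” can only reproduce a tiny slice of what the first one could. Whether deployed models actually degrade this way is exactly what’s being argued about in this thread; the sketch only shows the mechanism in isolation.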

Did you ever hear that story about the researchers who had 2 LLMs talk to each other and they eventually began speaking in a language that nobody else could understand? What really happened was that their conversation started to turn more and more into gibberish until they were just passing random letters and numbers back and forth. That’s exactly what happens when you train AI on the output of AI. The “AI created their own language” thing was just marketing.

JPEG artifacts but language

You may want to use AI’s some time for the sake of science. They are many times worse than a year ago.

People really latched onto the idea, which was shared with the media by people actively working on how to solve the problem

It would be great if ALL social media platforms banned that garbage.

Or just had a filter to hide it. I don’t feel like banning something from everyone just because I personally don’t like it.

I would and I’d have no problem with it at all. If people want AI slop, they can go find it where it is allowed.

There’s literally an app for it called Sora.

Hmmmm, must not be American.

I think such a filter wouldn’t function well enough to keep up, such is the case with search engines which offer the feature, and instead a 0 tolerance ban would be the only effective method.

A zero-tolerance ban still requires a method of detecting AI content in order to enforce said ban. Having such a detection system in place would then just as well give people the option to choose for themselves whether they want to see such content or not. Of course such a filter isn’t 100% accurate, but neither is a total ban. Some of that content will always get through.

Humans can make reports to contribute to banning accounts and even IPs that prove problematic.

Humans contributing tags for filters would be like fighting the tide with a spoon.

> Humans contributing tags for filters would be like fighting the tide with a spoon.

Isn’t that what you’re literally advocating for here? I don’t see the practical difference between having users reporting AI content versus users reporting AI content that isn’t correctly labeled as such.

I stand behind what I said. Give people the option to filter it out of their own feeds if they want to. I don’t wish to push my own content preferences onto people with different tastes. Curating your own feed is the way to go. Not top down control from the tech companies themselves.

You don’t see a practical difference between tagging an image and removing the source of the images? Really? You can’t figure it out when it’s been spelled out for you? You, specifically you, have a hard time understanding? You’re not piecing the mystery together, yet? Your wheels are turning on this colossal conundrum?

Hey bud, mull it over for a bit and let me know if you figure it out. Smh.

Nor can I figure out this level of unprovoked hostility toward a complete stranger, but looking through your moderation history made it pretty clear that it’s not personal but you’re just lashing out to cope with whatever personal issues you’re dealing with. I don’t like it but get it. I genuinely hope you find some peace, but I don’t want anything more to do with this, so don’t spend your time writing a response. I’ve had enough with trying to deal with mean people online.

personally, I would ban it at the federal level and anytime you use it someone shows up at your house and destroys everything and throws away your computer. and then you go to jail. and then anyone who tries to visit you in jail gets punched in the face. and you have to eat poop in jail

Prohibition of something easy to ~~make~~ run at home? An interesting choice.

yea

Loops ftw

What’s Loops?

A Fediverse platform for short form video, made by the guy who makes Pixelfed

Not really just short form, it’s more of a take on video feeds rather than just the limited-length quick content Vine was famous for.

Obviously the focus is still on short(ish) content format, but I see more and more people transition to longer videos to deliver content. On YT/Facebook most videos I see nowadays are 10min or above.

Vine, but federated!

More tiktok than vine, really

You’re not wrong, but an arbitrary maximum video length is the least of my problems with a Dorsey product

But does it ban AI content?

That would be based on the server’s policies, same as Lemmy or Mastodon.

I’d trust a federated environment a billion times more than anything Jack Dorsey is doing

Yeah, will never understand why these big billionaires keep taking these “we are for the people” stances, but are still trying to spin up these same ol for-profit, centralized products. If they really cared, they’d use that money to help nonprofits or decentralized services and stay out of the damn way.

It’s because it’s profitable, that’s why they do it. As long as they don’t Elon Musk, most people either don’t know who these people are or don’t care. And if they do go full EM, then most people still don’t care and it’s still profitable.

Yeah, they want money and clout

TikTok but without users.

TIL. Thanks friend.

Why would anyone touch any garbage this guy produces?!

Banned for now…

Huh?

don’t care dorsey is the problem

Another walled garden that will fracture the system even more and give even more credit to the open social web.

It is on nostr, I don’t like the protocol very much but it is decentralised

Is it decentralized like the fediverse or ‘decentralized’ in theory like bluesky?

Bluesky is decentralized, it’s just decentralized in a different way than Fediverse apps. A lot of fediverse people assume all decentralization would look like ActivityPub, so they just say it’s fake decentralization rather than learning how it works. (I know because I used to do the same, then I realized how little I understood it from this great blog post, and have since learned a lot more about it.)

There is already alternative infrastructure available, e.g. Blacksky and a variety of other applications hosted using ATProto (you can see a few here: https://bsky.social/about).

You can use any of these apps while maintaining full control of your own data by running your own PDS, or using any community maintained PDS. If you already have an account on the Bluesky PDS, you can migrate it, retaining all of your data. If you don’t feel like migrating yet, you can also just export your rotation key, which would allow you to maintain control of your account even in the event that the Bluesky PDS does become evil or something.

Speaking of ATProto, sprk.so is a similar upcoming app, although I think it’s going to be more similar to TikTok than Vine.

Yes.

No

Maybe?

I don’t know, can you repeat the question?

You’re not the boss of me now!

He’s on third.

It is actually decentralised as far as I can tell, although I would like to point out that bluesky is also decentralised, while bsky.app has dominant market share, nothing stops anyone from hosting their own, completely independent, instance. Some have already done so.

And even with the large majority using bsky.app, the true decentralized nature of Bluesky is anyone can host the data server that contains the data for their account. Even if you keep using bsky.app as your frontend, your data can be kept on a self hosted PDS.

It still can’t function without an appview, so the PDS alone is not decentralized, but stuff like Blacksky is. Hence people being reachable on some appviews and not others now, because of different moderation decisions.

Nostr is part of the fediverse, as is Bluesky’s ATProto. Neither Mastodon/Lemmy (ActivityPub) nor Bluesky (ATProto) is decentralized. They are poly-centric, and calling Bluesky poly-centric is still a little bit of a fib. Nostr is truly decentralized. Here on Lemmy, if the admin of piefed.world gets mad at the admin of lemmy.ca, we couldn’t communicate anymore unless one of us got a new account. On Nostr, if the relay owner no longer wanted my content, you can connect to a different relay that does. (For example)

Except it’s not a walled garden, it’s built on nostr.

Jack Dorsey once again singing “but baby I’ve changed!”

hard pass from me.

https://divine.video/ - For people who want to see for themselves.

Also the android app from the TechCrunch article, seems like it was nuked though: https://divine.b-cdn.net/app-release.apk

In the main picture, about half of those videos use filters that do something based on the location of the person’s head. Unless they’ve changed the definition since I went to college, that would be classified as a type of computer vision, aka AI.

Yeah they just mean the more layperson understanding of AI as in AI-generated content or as YouTube Studio dubs it: “synthetic media” (pretty good term imo)

Basically just stuff that was “generated”.

I’m sure transformative use of ML like filters etc. would be fine. Even incorporation of generated elements in otherwise a normal video would be fine.

So “I know it when I see it” rules, rather than anything rigorously defined.

Assuming this gets any traction at all the witch hunts will be rampant.

Honestly, I don’t really trust any cryptobro, I see them on similar level as AI-bros

Lemmy hates everything and everyone it seems.

Jack Dorsey more than deserves the hate and I’m happy to discuss it with you.

We like our negativity here - it’s still okay to disagree and be positive though!

That being said, Dorsey was fine selling his last media company to the highest-bidding fascist. Chances are he’ll do it again.

Personally, I won’t use any social media that isn’t billionaire-proof.

Valid criticism isn’t baseless hate. And it’s better to be skeptical than blindly complacent.

Weird Al is gonna be devastated!

What’s that guy need so much water for anyway?!?!?

Well, my kind of humor.

Sure pal, sure. That’s what they all said, no AI, and look now.

Can’t wait until they offer him 1 billion or scare him to death to put AI on it.